A plain person's guide to Secure Sockets Layer

This document attempts to describe Secure Sockets Layer, or SSL, in

simple terms. A full explanation is a daunting task, as SSL depends on

several layers of complex software and some mathematical concepts, but

once these are grasped, SSL itself is not too bad!

The first thing you need to know about Secure Sockets Layer is that it is

no longer called that. For purist reasons, the protocol formerly known as

SSL is now called Transport Layer Security, or TLS. The reasons for this

name change are fairly esoteric and originate partly in a description of

networking architecture known as the Open

Systems Interconnection seven-layer networking model. SSL hovered

uncomfortably between the transport layer (4) and the presentation layer

(6), and some experts felt, long after the OSI model had fallen out of

fashion, that SSL was not really a layer

at all. In the more

recent TCP/IP

model, which has fewer layers, SSL operates somewhere between the

transport and application layers. Also, the protocol can, in

principle, be applied to networking interfaces other than "sockets",

even though the vast majority of global communications are now made using

the socket programming interface.

So, just at the time when the terms SSL and Secure Sockets Layer were

becoming familiar to the general public as the secure networking standard

that all Internet services should aspire to, the guardians of the

protocols decreed that it should be renamed, to the confusion of all. The

same purist mentality led to the emerging popular term of URL being

replaced by URI (a general term that incorporates both URNs and URLs).

In spite of the name change, TLS is not vastly different from SSL.

Internally, messages contain an SSL ProtocolVersion number

within them. This was 0200 for the original Version 2 of SSL, and 0300 for

SSL Version 3. (SSL Version 1 was so insecure that it was never released.)

When TLS Version 1 was introduced, the internal protocol version number

was only increased to 0301. TLS 1.1 became 0302, TLS 1.2 became 0303,

and TLS 1.3 will probably be 0304.

Almost every product that implements TLS continues to refer to it as SSL,

usually with some weasel words added, like "more properly known as TLS".

Some mathematics you might need

I know most people are turned off by mathematics, but before I can

explain some of the cryptography terms, I need to introduce a few

mathematical concepts. Many of these may be familiar, but I will restate

them anyway as a reminder.

- Integer

- This is the mathematicians' posh name for a whole number; that is, a

number with no fractional part.

- Composite number

- When several integers are multiplied together to produce another

number, the result is called a composite number. Some examples are:

2×3=6, 12×5=60.

- Factors of a number

- The integers that can be multiplied together to produce a number are

called its factors. For many numbers, several different factors can form

the number in different ways. For example, 60 = 60×1 = 30×2 =

20×3 = 15×4 = 12×5 = 10×6 = 5×6×2 =

5×4×3 = 5×3×2×2. So the factors of 60 are: 60,

30, 20, 15, 12, 10, 6, 5, 4, 3, 2, 1.

- Prime number

- A number whose only factors are itself and 1 is called a prime number.

By convention, 0 and 1 are neither

prime nor composite.

- Prime factors

- The factors that can be multiplied together to produce a number, but

which are not, themselves, composite. So the prime factors of 60 are

just 5, 3, 2.

- Prime factorisation

- This is the process of discovering the prime factors of a number. If

the process does not find any prime factor other than the number itself,

the number is a prime number.

- Co-prime numbers

- Two numbers are co-prime if they do not share any factors (except 1).

So 60 is co-prime with 77 because none of the prime factors of 60 (5, 3, and

2) are equal to those of 77 (11 and 7).

- Powers and exponents

- When the same number is multiplied by itself several times, the

process is called raising that number to a power. For example,

2×2×2×2 is called "two raised to the fourth power",

or just "the fourth power of two". It is written in shorthand form

as 2^4 (the caret ^ stands in here for a superscript). The power to which the number is raised is called its

exponent. So 4 is the exponent of 2 in this expression. A

number raised to the power of one is just the number itself, and a

number raised to the power of zero is always equal to one: so 2^1

= 2, and 2^0 = 1.

- Binary notation

- Any number can be represented as the sum of a list of powers of 2. For

example 13 = 8 + 4 + 1 = 2^3 + 2^2 + 2^0.

A binary number is a representation of a number by 1s and 0s, where a 1

represents a power of two that is actually present in the sum, and a

zero represents a power of two that is absent, when the powers are

listed in decreasing size. So, again, 13 = 1×2^3 +

1×2^2 + 0×2^1 + 1×2^0. To

form the binary notation, the power expressions and the plus signs are

removed, leaving only the 0s and 1s. So this example is written as 1101.

The ones and zeroes in such a number are called binary digits,

an expression that is almost always shortened to bits.

- Hexadecimal notation

- For all but very small numbers, the binary representation of a number

is very long-winded, so hexadecimal notation is used instead. Each

number is represented as the sum of a list of powers of sixteen. For

example 2439 = 9×16^2 + 8×16^1 + 7×16^0,

and 2748 = 10×16^2 + 11×16^1 + 12×16^0.

Just as in the binary notation, the power expressions and the plus signs

are then removed. But since the two-digit multipliers 10, 11, 12, 13,

14, 15 would lead to ambiguous number forms, they are replaced by the

letters A,B,C,D,E,F (in upper or lower case). So the hexadecimal

representations of the two example numbers are 2439 = 987x, and 2748 =

ABCx (where the x suffix denotes a hexadecimal number†).

There is an easy conversion between binary notation and hexadecimal

notation, as four bits map into a single hexadecimal digit, so every

eight-bit byte can be mapped by a pair of hexadecimal digits.

- †In this document, I am not using the

C-language notation for hexadecimal (0xhhhh, where h is any

hexadecimal digit). I find it extremely clumsy, and it gives the wrong

impression that the hexadecimal notation can only be

specified in this form. There are actually several different ways of

annotating hexadecimal used in different programming languages, and

the one used in C and its descendants is, in my opinion, one of the

most obtuse. The strict mathematical notation is to use the base 16 as

a subscript, as in hhhh₁₆, but this is somewhat more

difficult to type and to read.

- Modular arithmetic

- Modular arithmetic is the arithmetic of a finite number of integers

less than a certain fixed

number, called the modulus. An expression in modular arithmetic is

followed by the abbreviation mod, followed by

the modulus itself. mod is an abbreviation

for the Latin word modulo, which is the ablative

case of modulus, meaning "with the modulus".

An example of its use, with a modulus of 12, is: 7+8 ≡ 3 (mod 12).

Notice that the usual equals sign is not used.

The ≡ sign, with three bars, means "is congruent to",

with the specific meaning of equality within modular arithmetic. In

modular arithmetic, the modulus is congruent to zero. In the example, 7

and 8 sum to 15, but because this exceeds the modulus 12, the excess 12

is subtracted from 15 to leave 3. Probably the most familiar example of

modular arithmetic is the 12-hour clock: when you add eight hours to

seven o'clock, you get three o'clock, because the hours wrap around at

twelve o'clock. Hours start at 12 o'clock, so the modulus 12 is also the

zero.

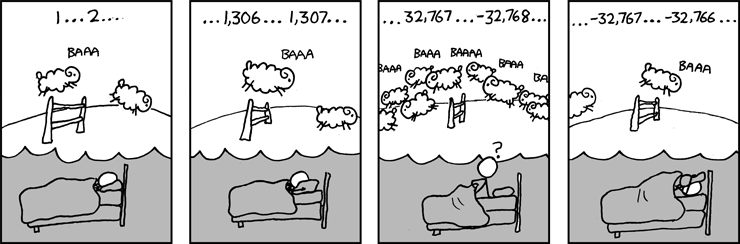

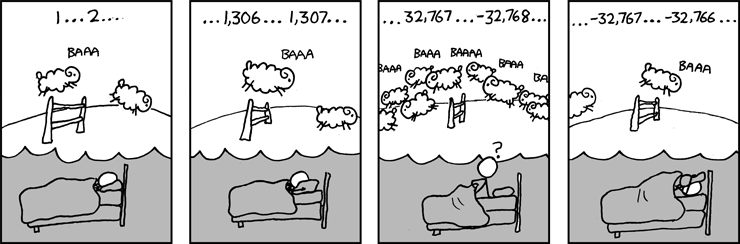

Modular arithmetic is very important in computing because integers

are usually represented in a computer by a memory location of fixed

size, called a word. In all but the most modern computers, the word

size is usually 32 bits. Only integers up to 2^32 − 1

(4,294,967,295) can be represented in a 32-bit word. (In older

computers, the word size could be eight or sixteen bits. In very

modern computers, the word size is 64 bits, and numbers up to 2^64 − 1

(18,446,744,073,709,551,615) can be represented.) By convention,

numbers in modular arithmetic are always positive. But, in a computer,

numbers with a high-order bit set to one are frequently treated as

negative, so the range of signed integers that can be represented in a

32-bit word is −2^31 to 2^31 − 1

(−2,147,483,648 to 2,147,483,647) or, in a 16-bit word, −2^15 to 2^15 − 1 (−32,768 to 32,767), which is the point of the comic above. Notice that the sign

convention does not affect the binary (or hexadecimal) value of the

number in computer memory: the bit pattern for −1 is identical

to that for 4,294,967,295 and is FFFFFFFFx. When +1 is added to this

number, the result is zero in both cases, because modular arithmetic

is used:

-1 + 1 ≡ 0 (mod 4294967296)

4294967295 + 1 ≡ 0 (mod 4294967296)

- Primitive root

- In a set of integers using modular arithmetic, a number is a primitive

root if every number co-prime to the modulus is equal to some power of

that root. That is, r is a primitive root if, for every p

that is co-prime to n, there is an integer i

such that r^i ≡ p (mod n).

- Elliptic Curve

- An elliptic curve function is a relationship between x and

y of the form y^2 = x^3 + ax + b.

This is not the equation of an ellipse.

The term arose from the study of elliptic integrals, whose name arose because

they were originally used to calculate the arc length of an ellipse.
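The definitions above can be checked with a few lines of Python. This is only a sketch for small numbers: the helper names `prime_factors` and `is_primitive_root` are made up for this illustration, and trial division is far too slow for the very large numbers used in real cryptography. Python writes hexadecimal with the C-style 0x prefix.

```python
from math import gcd


def prime_factors(n):
    """Return the prime factors of n, with repeats, by trial division."""
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        factors.append(n)  # whatever is left over is itself prime
    return factors


def is_primitive_root(r, p):
    """True if the powers of r produce every value 1..p-1 modulo the prime p."""
    return {pow(r, i, p) for i in range(1, p)} == set(range(1, p))


print(prime_factors(60))                  # [2, 2, 3, 5]
print(gcd(60, 77))                        # 1, so 60 and 77 are co-prime
print(bin(13), hex(2439), hex(2748))      # 0b1101 0x987 0xabc
print((7 + 8) % 12)                       # 3, the clock example
print((4294967295 + 1) % 2**32)           # 0, a 32-bit word wrapping around
print(-1 % 2**32)                         # 4294967295, same bit pattern as -1
print(is_primitive_root(3, 7), is_primitive_root(2, 7))  # True False
```

The last line shows that 3 is a primitive root modulo 7 (its powers are 3, 2, 6, 4, 5, 1), whereas the powers of 2 only ever reach 2, 4, and 1.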

Some cryptography terms

The SSL architecture depends heavily on three types of computer

cryptography. These are:

- Digest cryptography

- In which a message is irreversibly scrambled and shortened. No key is

required.

- Symmetric-key cryptography

- In which a single key is used to encrypt and decrypt a message.

- Asymmetric-key cryptography

- In which a pair of keys are used to encrypt and decrypt a message. The

two keys are related in such a way that a message encrypted with one of

them can only be decrypted with the other.

Digest and Asymmetric-key cryptography can be combined to produce digital

signatures.

Digest cryptography

At first, it seems like the irreversible digest cryptography would be

fairly useless. But it is extremely powerful in validating the integrity

of a message: that is, whether a message has been correctly preserved

during processing.

The following examples use one of the simplest digest methods, known as

MD5:

MD5(Jack and Jill went up the hill.) = FDA1D3E3B885E20D8B53490CA945F4B0x

MD5(Jack and Jill went up the hall.) = FF6624FC67385074B7D97233609CE839x

MD5(Pay the bearer the sum of $1000.) = 833E3213A371D8E50CC5335C9197B37Ex

MD5(Pay the bearer the sum of $9000.) = 677A105D4E03DE53D4307E689455B069x

Note that, whatever the length of the message being digested, the MD5

result is always exactly 32 hexadecimal digits, which is 16 bytes, or 128

bits. Furthermore, when a message is modified with only a tiny change

– even just a single bit – its digest changes dramatically.

This is because the internal calculations are actively encouraged to

overflow the normal capacity of a 32-bit computer word, so that some data

bits are deliberately lost. Because of this data loss, the original

message is irretrievably lost, and cannot be recovered from the digest.
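The fixed length and the sensitivity to small changes can be observed with Python's standard hashlib module. This is a minimal sketch: the helper name `md5_hex` is invented here, the text is assumed to be encoded as UTF-8, and the digests printed depend on the exact bytes of each message (including punctuation), so they may not match the values quoted above if those were computed from slightly different input.

```python
import hashlib


def md5_hex(text):
    """Return the MD5 digest of text (encoded as UTF-8) as upper-case hex."""
    return hashlib.md5(text.encode("utf-8")).hexdigest().upper()


first = md5_hex("Jack and Jill went up the hill.")
second = md5_hex("Jack and Jill went up the hall.")

print(first)
print(second)

# Every MD5 digest is 32 hexadecimal digits, that is 128 bits,
# whatever the length of the input.
print(len(first) * 4)

# Count how many of the 32 hexadecimal digits differ after a one-letter change.
differing = sum(1 for x, y in zip(first, second) if x != y)
print(differing, "of", len(first), "digits differ")
```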

A message digest is also known as a hash. More modern digest algorithms

are SHA-1, SHA-2,

and SHA-3,

where SHA means Secure Hash Algorithm. The MD5 and SHA-1 algorithms are

nowadays regarded as inadequate for use in secure systems.

Symmetric-key cryptography

Symmetric-key cryptography is probably the most familiar form of

encryption. An encryption algorithm applies an encryption key

to a message containing plain text, and produces a message

containing cipher text. Later, an opposite decryption

algorithm applies the same key to the cipher text to recover the

plain text. The algorithm typically rotates and manipulates the bits of

the plain text in a predictable but difficult-to-reverse way, using the

content of the encryption key, but none of the data is lost.
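The idea of one shared key that both scrambles and unscrambles a message can be shown with a deliberately trivial cipher. The sketch below uses a repeating-key XOR, which is not one of the algorithms discussed in this document and offers no real security; it is chosen only because it fits in a few lines. The function name `xor_cipher` and the sample key are assumptions for illustration. With XOR, the decryption algorithm happens to be identical to the encryption algorithm.

```python
from itertools import cycle


def xor_cipher(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the key, repeating the key as needed.

    Toy example only: a repeating-key XOR is trivially breakable.
    """
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


key = b"sesame"                                  # the shared secret key
plain_text = b"Pay the bearer the sum of $1000."

cipher_text = xor_cipher(plain_text, key)        # encrypt
recovered = xor_cipher(cipher_text, key)         # decrypt with the same key

print(cipher_text.hex().upper())
print(recovered.decode("ascii"))
```

No data is lost: the cipher text is exactly as long as the plain text, and applying the same key again restores every bit.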

One of the first commonly used symmetric-key computer algorithms was the

Data Encryption Standard (DES), which was adopted in 1977. This requires

an eight-byte secret key, but only 7 bits in each byte are used, so it is

referred to as a 56-bit key. This implies that there are only

2^56 (about 72 quadrillion, or 72×10^15) different DES keys,

which in principle could all be tried in turn to brute-force the decryption of

a cipher text. With modern computing power, this is not totally

infeasible, so increased key sizes and more complex algorithms have

been introduced. Examples of these are: Triple DES (3DES), which applies

the DES algorithm three times, with three different keys, so increasing

the nominal key size to 168 bits; and the Advanced Encryption Standard

(AES), which uses key sizes of 128, 192 or 256 bits. Newer encryption

algorithms include ARIA (Korea), Camellia (Japan), SEED (Korea), and GOST

(Russia).

Asymmetric-key cryptography

Asymmetric-key cryptography is really the miracle technology that makes

SSL work. It is also known as public-key encryption. In principle, a pair

of digital keys are used, known as the public key and the private key. A

message encrypted with the private key can only be decrypted

with the public key, and a message encrypted with the public key can only

be decrypted with the private key. It seems that the public and private

keys are therefore interchangeable (so long as one of them is kept

secret).

In practice, the situation is somewhat different. The primary set of algorithms

that make public-key encryption work are known as the

Rivest, Shamir, and Adleman algorithms, also known as RSA. The mathematical

principle is based on the difficulty of factorising very large numbers

into their constituent prime factors.

The algorithm to choose a public key/private key pair is the following:

- Choose two large but different prime numbers, p and q.

- Calculate the product n of these two primes n =

p×q.

- Calculate the value φ equal to (p−1)×(q−1).

This is a count of the numbers less than n that do not share

a common factor with n, and is known as the

Euler totient function of n.

- Choose an integer e between 1 and φ

that is co-prime with φ: that is, such that e

and φ have no common factors.

- Determine the number d such that d×e ≡ 1

(mod φ); that is, the number d

such that the product d×e gives the remainder 1

when divided by φ.

Now the public key consists of the two numbers n and

e, and the private key consists of the two numbers n

and d. The number n is known as the modulus

for this key pair, d is known as the decryption exponent, or

the private exponent, and e is known as the

encryption exponent, or the public exponent. The size of the

key is usually counted as the number of bits of the modulus n.

In the original

RSA paper, the algorithm suggests choosing the private exponent d

first, and then calculating e from it, but modern practice

reverses this. In fact, the public exponent e is usually fixed

as 65537, which is 2^16+1, the largest known

Fermat prime, also called F4. This, of course, means that the

private and public keys are not interchangeable.

To perform encryption of a message, the message itself is regarded as a

binary integer, m. (In the end, all binary strings are

actually very large binary numbers.) The encrypted cipher text is then

equal to c ≡ m^e (mod n),

which uses the public key numbers n and e. To

perform decryption of this cipher text and regain the plain text,

calculate m ≡ c^d (mod

n). This can only be done by the recipient, who knows the private

exponent d. The value of d can only be deduced

from e by factorising n (into p and q).

It is the difficulty of this task that is the strength of the RSA

algorithm.

The calculation of the powers m^e and c^d

is computationally expensive compared to symmetric-key encryption.
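The key-generation steps and the encryption and decryption formulas can be followed with deliberately tiny numbers. The primes 61 and 53, the exponent 17, and the message 65 are arbitrary choices for this sketch; real keys use primes hundreds of digits long, and real systems also add padding to the message, which is omitted here. The three-argument form of `pow` with a negative exponent requires Python 3.8 or later.

```python
from math import gcd

# Key generation with toy primes (far too small for real use).
p, q = 61, 53
n = p * q                      # modulus: 3233
phi = (p - 1) * (q - 1)        # Euler totient of n: 3120

e = 17                         # public exponent, must be co-prime with phi
assert gcd(e, phi) == 1

d = pow(e, -1, phi)            # private exponent: d*e ≡ 1 (mod phi), gives 2753

# Encryption uses the public key (n, e).
m = 65                         # the message, regarded as an integer below n
c = pow(m, e, n)               # c ≡ m^e (mod n), gives 2790

# Decryption uses the private key (n, d).
recovered = pow(c, d, n)       # m ≡ c^d (mod n), gives 65 again

print(n, phi, d, c, recovered)
```

Anyone who could factorise 3233 back into 61 and 53 could recompute phi and then d, which is why real moduli are chosen to be far too large to factorise.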

How to share encryption keys

Symmetric-key cryptography is by far the most efficient way of encrypting large amounts of data.

But it shows up a major problem.

To use it, both partners in the conversation must know a single shared secret key.

And how do you share a secret key with a partner that you have never communicated with before?

This is known as the key exchange problem.

An early solution to this problem was for a trusted courier to carry the key,

physically locked in a secure container, from one location to another.

This is highly secure, but expensive and inconvenient, and hardly practical for electronic commerce.

Furthermore, the same key is used for encrypting all traffic, which makes it somewhat easier

for an attacker to break the key.

The solution to the key exchange problem lies in asymmetric-key cryptography.

Although it is computationally expensive, it only needs to be used briefly to exchange a key between partners.

Once the key has been exchanged securely, it can be used in a much more efficient symmetric-key algorithm

to encrypt the main data traffic, known as the bulk data transfer.

RSA key exchange

The RSA asymmetric key algorithm can be used to perform a key exchange.

One of the partners in the conversation (usually identified as the client) uses a random number generator

to create a secret key. The client then uses the public key of the other partner

(usually identified as the server) to encrypt the secret key.

By the properties of asymmetric encryption, only the server who knows the corresponding private key can decrypt the secret.

The random number created by the client thus becomes a shared secret

that is known only by the client and the server.

As we shall see later, SSL uses a slightly more sophisticated way to obtain a shared secret,

but the principle is essentially the same.

In the earliest version of SSL, RSA was the only key exchange mechanism that was used.

A problem with the simple key exchange using RSA is that the same key is

used for both authentication and encryption.

If a private key is ever compromised in the future, then historical messages encrypted with it can

be decrypted, possibly years later, if a copy of the encrypted conversation had been preserved at the time.

The solution to this problem is known as forward secrecy.

Diffie-Hellman key exchange

A technique that can be used to solve the forward secrecy problem is the

Ephemeral Diffie-Hellman key exchange.

The Diffie-Hellman key exchange occurs in two exchanges:

- Before exchanging the secret key, the client and server agree on a

prime modulus p and a primitive root g (mod

p). In this context, g is called the generator.

- The client creates a private key a, calculates A

≡ g^a (mod p), and sends it

to the server.

- The server creates a private key b, calculates B

≡ g^b (mod p), and

sends it back to the client.

- The client calculates the shared secret key from s ≡ B^a

(mod p).

- The server calculates the shared secret key from s ≡ A^b

(mod p).

The shared secret key s ≡ g^(ab) (mod

p) is derived mutually by the client and the server, and is not

known in advance by either of them. s cannot be derived from

either g^a or g^b,

even when g and p are known, unless the opposite

private key is known, provided that p is large enough. Since

the generator and the prime are not secret, suitable values have been

published (in RFC2409 and

RFC3526). As the private

numbers a and b are not fixed in a certificate, like

the RSA private keys, they can be created dynamically on a session by

session basis. They are therefore called ephemeral keys, and the process is

known as the Ephemeral Diffie-Hellman key exchange. Since the secret keys a

and b are different for every connection, and they are never

exposed on the connection, they can never be recreated from a historical

trace file, so the forward secrecy problem is solved.
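The exchange can be simulated in a few lines. This sketch assumes a toy prime modulus of 23 with generator 5 (which is a primitive root modulo 23); these values and the helper name `make_keypair` are illustrative only, and real exchanges use the very large published primes. Both sides run in one program here, whereas in practice only the public values A and B cross the network.

```python
import secrets

P = 23   # toy prime modulus (assumption for illustration)
G = 5    # generator: a primitive root modulo 23


def make_keypair():
    """Create an ephemeral private key and the matching public value."""
    private = secrets.randbelow(P - 2) + 1    # random integer in 1..P-2
    public = pow(G, private, P)               # g^private (mod p)
    return private, public


a, A = make_keypair()          # client side: keeps a, sends A
b, B = make_keypair()          # server side: keeps b, sends B

s_client = pow(B, a, P)        # client computes B^a (mod p)
s_server = pow(A, b, P)        # server computes A^b (mod p)

print(s_client == s_server)    # both sides hold the same shared secret
```

Because `make_keypair` draws fresh random private keys on every call, each run models a new session with new ephemeral keys.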

Elliptic curve key exchange

A more complex form of public-key cryptography that can be used for key exchange is Elliptic Curve

cryptography, which is described in

A (Relatively Easy To Understand) Primer on Elliptic Curve Cryptography

by Nick Sullivan. A more comprehensive but much more difficult description

is at Standards for Efficient

Cryptography 1 (SEC 1): Elliptic Curve Cryptography.

A fairly accessible mathematical discussion is

An Introduction to the Theory of Elliptic Curves by Joseph H. Silverman.

I have also written a Java applet which

visualises elliptic

curves geometrically. The documentation with the applet also gives my simplified overview of the

mathematical basis for elliptic curve cryptography.

An elliptic curve is generated by the cubic equation y^2 = x^3

+ ax + b. This curve has an interesting property that (with a few exceptions)

any straight line that intersects it in two points also intersects it in exactly one other point.

The three collinear points can be used to define a mathematical group addition property.

If the three points where the line meets the curve are P, Q, and R,

the group property + is defined such that P + Q + R = 0.

This allows the definition of another point −R, equal to P + Q,

which is the reflection of R in the x-axis.

This process defines the addition

of two points to produce a third.

This process can then be applied over and over to produce a fourth,

fifth, sixth point, etc. Furthermore, the process can be kicked off from a single point on the

curve by drawing a tangent at the first point to generate the second point. The

number of iterations to get from a specified initial point to another

identified point is, in general, extremely difficult to calculate, and

this fact is used as the basis for elliptic-curve cryptography.

The problem is called the elliptic curve discrete logarithm problem.

Although elliptic curves are usually defined using real numbers, for

cryptography the elliptic curves are defined over a finite set of

integers, using modular arithmetic with a prime modulus, p.

The curve

is therefore defined as:

y^2 ≡ x^3 + ax + b (mod p)

Because only integer coordinates are used, the curves actually consist of a finite

set of disconnected points. This is only possible for certain values of a,

b, and p. In practice, rather than inventing

elliptic curves with arbitrary parameters, a number of standard curves are

used, such as those described at Recommended

Elliptic Curve Domain Parameters.
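Point addition over a finite set of integers can be sketched with a very small curve. The parameters below (p = 17, a = 2, b = 2, starting point (5, 1)) are a textbook-sized toy chosen for this illustration, not one of the standard curves, and the function names are invented here. The point at infinity, which acts as the zero of the group, is represented by `None`.

```python
P_MOD = 17               # toy prime modulus
CURVE_A, CURVE_B = 2, 2  # curve: y^2 ≡ x^3 + 2x + 2 (mod 17)
G = (5, 1)               # starting point on the curve


def is_on_curve(point):
    """Check that a point satisfies the curve equation modulo P_MOD."""
    if point is None:
        return True
    x, y = point
    return (y * y - (x * x * x + CURVE_A * x + CURVE_B)) % P_MOD == 0


def ec_add(p1, p2):
    """Add two points using the chord (or tangent) construction."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None                      # vertical line: P + (-P) = 0
    if p1 == p2:                         # tangent at the point
        slope = (3 * x1 * x1 + CURVE_A) * pow((2 * y1) % P_MOD, -1, P_MOD)
    else:                                # chord through two points
        slope = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD)
    slope %= P_MOD
    x3 = (slope * slope - x1 - x2) % P_MOD
    y3 = (slope * (x1 - x3) - y1) % P_MOD
    return (x3, y3)


def ec_mul(k, point):
    """Add a point to itself k times, by repeated doubling."""
    result = None
    while k:
        if k & 1:
            result = ec_add(result, point)
        point = ec_add(point, point)
        k >>= 1
    return result


print(ec_add(G, G))      # (6, 3)
print(ec_mul(3, G))      # (10, 6)
# Two parties with private numbers 3 and 5 arrive at the same point.
print(ec_mul(3, ec_mul(5, G)) == ec_mul(5, ec_mul(3, G)))   # True
```

Computing `ec_mul(k, G)` is quick, but recovering k from the resulting point is the elliptic curve discrete logarithm problem, which is only hard when the curve is very much larger than this one.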

Digital signatures

A combination of digest encryption and public-key encryption can be used

to produce an unforgeable digital signature. The sender first creates a

digest of the document to be signed – a piece of plain text –

using one of the cryptographic digest algorithms. Then the digest (only)

is encrypted with the sender's private key. The result is the digital

signature. When the recipient receives the plain text and the signature,

he or she must recreate the digest of the plain text, using the identical

algorithm. They must then decrypt the signature using the public key of

the sender, to recover the sender's version of the digest. If both

versions of the digest match, the signature is validated. The fact that

the public key successfully decrypts the signature proves that it must

have been encrypted by the sender. The fact that the decrypted digest

matches the receiver's recalculated digest proves that the plain text has

not been altered since it was signed.

Note that encryption with the private key does not produce secret

cipher text, because anyone who knows the public key can decrypt it. But

it does assure anyone who does the decryption that the cipher text was

created by the owner of the private key.
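The sign-then-verify procedure can be sketched by combining a digest with a toy RSA key pair. Everything specific here is an assumption for illustration: the tiny key (n = 3233, e = 17, d = 2753), the use of SHA-256 as the digest, and the shortcut of reducing the digest modulo n so that it fits under the toy modulus. Real signature schemes use large keys and a padding step, and never shrink the digest in this way.

```python
import hashlib

# Toy RSA key pair, far too small for real use.
N, E, D = 3233, 17, 2753


def digest_int(message: bytes) -> int:
    """Digest the message and squeeze the result below the toy modulus."""
    full_digest = hashlib.sha256(message).digest()
    return int.from_bytes(full_digest, "big") % N


def sign(message: bytes) -> int:
    """Encrypt the digest (only) with the private exponent."""
    return pow(digest_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Decrypt the signature with the public exponent and compare digests."""
    return pow(signature, E, N) == digest_int(message)


document = b"Pay the bearer the sum of $1000."
signature = sign(document)

print(verify(document, signature))              # genuine signature
print(verify(document, (signature + 1) % N))    # altered signature is rejected
```

The sender needs the private exponent D to sign, while anyone holding the public numbers N and E can check the result.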

Legal issues concerning encryption

For many years, software implementing strong encryption was regarded as a

munition, and the export of software or hardware containing it from the USA was

subject to extreme controls.

Weaker encryption, using shorter keys or weaker algorithms, was graciously permitted to be

exported from the USA, and so was known as "export grade" encryption.

This is of course a very US-centric view: anything that is exported from the USA is imported by the other country;

so every other country in the world should have called it "import grade".

In France, the implementation of even export

grade encryption was subject to government scrutiny,

and a special licence was required to use software that used keys longer than 40 bits.

The French government removed this requirement in 1999.

This situation led to a couple of articles in the 1990s ridiculing the customs

requirements of hand-carrying computers that contain encryption

software. Read My

Life as an International Arms Courier, by Matt Blaze, and

My

life as a Kiwi arms courier, by Peter Gutmann.

The US regulations were effectively removed in 2001, although it is still

illegal to export encryption products from the USA to certain embargoed countries (the

usual suspects). The current state of international cryptography law is maintained in the

Crypto Law Survey.

Nevertheless, governments are deeply suspicious of citizens wishing to use encryption that prevents

them being spied on, and as recently as January 2015 the British government has proposed that

encryption products should become unavailable to the general public.

Building portable binary objects: ASN.1, BER, and DER encoding

It is already evident that for objects like the public and private keys,

which are pairs of binary numbers, some structure needs to be imposed

before they can be used in real-world computer systems. For instance, we

need to know how long the numbers n, d, and e

are, and in what order they should be stored. (They cannot be simply

concatenated as a continuous bit string, as it would then be impossible to

know where one number begins and the other ends.)

To represent abstract concepts such as numbers and ordered pairs in a

way that can be easily handled in a computing system, a notation known

as Abstract Syntax Notation One (ASN.1) was developed in the 1980s. It is

described completely in John Larmouth's book

ASN.1 Complete. The abstract notation itself does not

suggest or require any particular binary representation: as its name

implies, it is totally abstract. Instead, the binary representations of

such abstract concepts are described in a number of encoding rules. The

two that are relevant in this discussion of SSL are the basic encoding

rules (BER), and the distinguished encoding rules (DER).

ASN.1 can be regarded as a programming language for describing the layout

of complex data structures. It contains some built-in data types, which

can be combined to produce more specific custom-defined types. The

built-in types are: BOOLEAN, INTEGER, ENUMERATED, REAL, BIT STRING, OCTET

STRING, NULL, OBJECT IDENTIFIER. Other extended data types such as

UTF8String, UTCTime, and GeneralizedTime are also defined. Data types can

be composed together using the connectors SEQUENCE, SEQUENCE OF, SET, SET

OF, or CHOICE. A SEQUENCE or SET is a collection of elements of different

types, whereas a SEQUENCE OF or SET OF is a collection of elements of the

same type. Just as in mathematics, the elements in a SET or SET OF do not

have any particular order, so they are less useful for creating real

structures, and are rarely used. CHOICE is equivalent to a union

in other programming languages: only one of the elements actually appears

in the final structure.

The OBJECT IDENTIFIER type

An object identifier is a general-purpose way of identifying a type

within an international

hierarchical global directory of object types. Each object type is

identified by a sequence of integers, where each integer is notionally

associated with a node in a huge tree containing close to a million

entries. The three nodes nearest the root of the tree are associated with the

organisations that coordinate the type definitions, which are ISO (the

International Organization for Standardization) and ITU-T (the International

Telecommunication Union Telecommunication Standardization Sector). Each of these

organisations has its own hierarchy of definitions in the tree, and

there is also a hierarchy of joint definitions, for which responsibility

is shared between the two groups. The three base nodes are therefore known

as itu-t (0), iso (1), and joint-iso-itu-t (2).

When the ASN.1 architecture was first being defined, the ITU-T was known

as the CCITT (Comité Consultatif International

Téléphonique et Télégraphique, or International

Telegraph and Telephone Consultative Committee), so these nodes were

originally known as ccitt (0), iso (1), and joint-iso-ccitt

(2).

The graph at the right shows just a few of the entries in the

object-identifier tree. The path from the root to any of the nodes in the

tree is called an arc.

Notice the node labelled internet (1) below the 1.3.6 dod

arc. The notes on this entry say: "OID 1.3.6.1 was hijacked by the Internet

community in IETF RFC 1065, by Marshall Rose and K. McCloghrie. The list of subsequent

nodes is based on IETF RFC 1155. The authoritative reference on the rest is the Internet

Assigned Numbers document, currently IETF RFC 1700."

So "the Internet" does not actually belong to the

Department of Defense! In fact, RFC1065 states:

As of this writing,

the DoD has not indicated how it will manage its subtree of OBJECT

IDENTIFIERs. This memo assumes that DoD will allocate a node to the

Internet community, to be administered by the Internet Activities Board

(IAB) as follows:

internet OBJECT IDENTIFIER ::= { iso org(3) dod(6) 1 }

The 1.3.6.1.4.1 arc leads to sections for private corporations, where

they can register their own private object types.

OIDs can also be registered at a national level under arc 2.16.

See Operation of a country Registration Authority. The registration for X.509

certificates is at OIDs for X.509 Certificate Library Modules (not at 1.3.6.1.4.1 ...).

See notes at http://oid-info.com/get/1.2.840.113527.

oid-info.com states that new registrations are made under the 2.16.840.1

arc.

The numbers registered within the USA country node 1.2.840 begin at

113527. This apparently random number was chosen

by Jack Veenstra, chairman of the US registration authority

committee, to avoid special status being granted to organisations lucky

enough to get the lowest numbers. Meanwhile, in 1991, the

standards organisations invalidated the use of 1.2.840, and required

country registrars to use the arc 2.16 in preference. This is unfortunate,

as many of the OIDs in active use had already been defined in the 1.2.840

arc.

Examples:

2.16.840.1.113531 - Control Data Corporation

2.16.840.1.113564 - Eastman Kodak Company

2.16.840.1.113730 - Netscape Communications Corp.

2.16.840.1.113735 - BMC Software, Inc

Binary encoding of the abstract notation

Before the artefacts described by ASN.1 can be used in a real computer

system, they have to be efficiently encoded into computer-readable binary

data. There are a number of different ways of doing this. BER (Basic

Encoding Rules) is the simplest, but leaves some of the encodings

ambiguous. DER (Distinguished Encoding Rules) is similar to BER, but

removes the ambiguities. PER (Packed Encoding Rules) produces a more

compact notation than DER. XER (XML Encoding Rules) specifies an encoding

of ASN.1 into XML (Extensible Markup Language).

Only BER and DER are described here, as they are the encodings used by

SSL. The formal specification of BER and DER is available as

ITU-T Recommendation X.690.

When it comes to encoding a specification expressed in ASN.1, the

approach is to use a TLV encoding (Type, Length, Value). The basic

encoding rules (BER) describe precisely how the bits should be laid out in

each of the type, length, and value components. The encoding combines

compactness with extensibility.

The data type referred to in ASN.1 as an OCTET is meant to be a

representation of a sequence of eight bits, which is nowadays known as a

byte. Perhaps uniquely in this specification, the bits in an octet are

numbered 1 to 8 from right to left. (This is different from the

convention that I have always been used to in my career at IBM, where bits

are numbered 0-7 from left to right. This 0-7 convention [MSB0] is also

used in RFC1166. The 7-0 convention [LSB0] is typically used in little-endian

systems, where the bit number represents the power of two that it

encodes.)

Each part of the TLV (Type, Length, Value) has its own BER encoding.

BER-encoding of Type

The type segment is the start of the binary TLV encoding. It contains a

tag number that represents the type's value. Its first or only byte

(octet) is partitioned into three subfields of two, one, and five bits:

┌────┬───┬───────┐

│ xx │ x │ xxxxx │

└────┴───┴───────┘

87 6 54321

The bits are conventionally numbered 1-8 from right to left.

Each type has an associated class, represented by the first two

bits (8-7). The classes are

00 Universal

01 Application

10 Context-specific

11 Private

The class is effectively a namespace in which the tag number has meaning.

- Universal

- the type is part of the ASN.1/BER architecture itself.

- Application

- the type is unique within a specific application.

- Context-specific

- the meaning of the type depends on the context in which it is used.

- Private

- the type is being used in a private specification.

Bit 6 is the P/C (primitive/constructed) flag. A value of 1 means

that the type is a constructed one, and that the later value field (V) is

built up out of a series of further TLV components. A value of 0 means

that the type is primitive, and does not contain any further TLV

components.

Bits 5-1 are the binary representation of the actual tag number,

except where they are equal to 11111, which is an indicator that the tag

number is continued in subsequent bytes. The subsequent bytes then contain

the tag number encoded seven bits at a time, with the high-order bit in

each byte (bit 8) signalling that the tag number is continued into further

subsequent bytes.
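The layout of the type bytes described above can be sketched in a few lines of Python. This is only an illustration: the function names encode_tag and decode_tag are hypothetical, and the sketch does no validation beyond what the text describes.

```python
CLASS_NAMES = ("Universal", "Application", "Context-specific", "Private")


def encode_tag(tag_class: int, constructed: bool, number: int) -> bytes:
    """Build the type bytes from a class (0-3), the P/C flag, and a tag number."""
    first = (tag_class << 6) | (0x20 if constructed else 0x00)
    if number < 31:
        return bytes([first | number])          # tag number fits in bits 5-1
    # Bits 5-1 are all ones: the tag number follows, seven bits per byte.
    groups = [number & 0x7F]                    # the last byte has bit 8 clear
    number >>= 7
    while number:
        groups.append(0x80 | (number & 0x7F))   # bit 8 set: more bytes follow
        number >>= 7
    return bytes([first | 0x1F]) + bytes(reversed(groups))


def decode_tag(data: bytes) -> tuple:
    """Return (class name, constructed, tag number, number of bytes used)."""
    first = data[0]
    class_name = CLASS_NAMES[first >> 6]        # bits 8-7
    constructed = bool(first & 0x20)            # bit 6
    number = first & 0x1F                       # bits 5-1
    used = 1
    if number == 0x1F:                          # continued in subsequent bytes
        number = 0
        while True:
            byte = data[used]
            used += 1
            number = (number << 7) | (byte & 0x7F)
            if not byte & 0x80:
                break
    return class_name, constructed, number, used
```

For example, encode_tag(0, True, 0x10) gives the single byte 30, which is the familiar first byte of a constructed Universal SEQUENCE.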

The tag numbers in the Universal class, which form part of the ASN.1/BER

architecture, are as follows (in hexadecimal):

| Hex | Name | Hex | Name | Hex | Name |
|-----|------|-----|------|-----|------|
| 01 | BOOLEAN | 0B | EMBEDDED PDV | 15 | VideotexString |
| 02 | INTEGER | 0C | UTF8String | 16 | IA5String |
| 03 | BIT STRING | 0D | RELATIVE-OID | 17 | UTCTime |
| 04 | OCTET STRING | 0E | TIME | 18 | GeneralizedTime |
| 05 | NULL | 0F | [unused] | 19 | GraphicString |
| 06 | OBJECT IDENTIFIER | 10 | SEQUENCE or SEQUENCE OF | 1A | VisibleString |
| 07 | ObjectDescriptor | 11 | SET or SET OF | 1B | GeneralString |
| 08 | EXTERNAL | 12 | NumericString | 1C | UniversalString |
| 09 | REAL | 13 | PrintableString | 1D | CHARACTER STRING |
| 0A | ENUMERATED | 14 | T61String | 1E | BMPString |

The tag numbers in the table above are the default numbers for

types in the Universal class. It is also possible to override the default

number for a tag by prefixing the type with a specific number enclosed in

square brackets. When this is done, the default class for the tag becomes

Context-specific, unless the tag's class is also specified. The

following examples show various encodings for a field named condition,

which is of type NULL:

| ASN.1 specification | Hex | Explanation |
|---------------------|-----|-------------|
| condition NULL | 05 | The default encoding for NULL in the Universal class. |
| condition [7] NULL | 87 | Encoding changed to 7 in the Context-specific class. |
| condition [APPLICATION 7] NULL | 47 | Encoding changed to 7 in the Application class. |

It is necessary to change the encoding of a tag whenever an ambiguity may

arise. This can happen when an element may be one of a number of

possibilities in a CHOICE construction, or where there are one or more

OPTIONAL fields, whose presence or absence must be signalled by a unique

tag number.

As a further complication, if the keyword EXPLICIT follows the bracketed

tag number, it means that the new tag number (the one in brackets) must be

used in an external wrapper TLV structure that contains the

original tag number in an inner TLV structure.
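The difference between replacing a tag and wrapping it can be shown with the NULL field from the table above. This is a minimal sketch with hypothetical helper names; it assumes single-byte tags (tag numbers below 31) and short-form lengths only.

```python
NULL_TLV = bytes([0x05, 0x00])  # Universal tag 5, length 0, no value bytes


def implicit_tag(tlv: bytes, number: int, tag_class: int = 2) -> bytes:
    """Replace the original tag byte, keeping the P/C bit, length, and value."""
    if number >= 31:
        raise ValueError("this sketch handles single-byte tags only")
    pc_bit = tlv[0] & 0x20
    return bytes([(tag_class << 6) | pc_bit | number]) + tlv[1:]


def explicit_tag(tlv: bytes, number: int, tag_class: int = 2) -> bytes:
    """Wrap the whole original TLV in an outer, constructed TLV."""
    if number >= 31 or len(tlv) >= 128:
        raise ValueError("this sketch handles short tags and lengths only")
    outer_tag = (tag_class << 6) | 0x20 | number   # constructed: holds a TLV
    return bytes([outer_tag, len(tlv)]) + tlv
```

With these helpers, condition [7] NULL encodes as 87 00, whereas condition [7] EXPLICIT NULL encodes as A7 02 05 00: the outer wrapper is constructed, and the original 05 00 survives inside it.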

BER-encoding of length

The length segment of the binary TLV encoding represents the length of

the subsequent value segment in bytes (octets). There are three forms

defined for the length:

- Short form

- When the length is less than 128, the length can be encoded as a

single binary value in bits 7 to 1, and the high order bit (bit 8) is

set to zero.

┌───┬─────────┐

│ 0 │ xxxxxxx │

└───┴─────────┘

8 7654321

- Long form

- For any length (but usually for those greater than 127) the length is

first encoded into a binary number that is right aligned and filled to

the left with zeroes to make a bit string that is a multiple of eight

bits. The bit string is then prefixed with a single byte in which the

high-order bit is set to one, and bits 7-1 are set to the number of bytes

in the bit string.

┌───┬─────────┐ ┌──────────┐ ┌──────────┐ ┌──────────┐ ┌──────────┐

│ 1 │ nnnnnnn │ │ xxxxxxxx │ │ xxxxxxxx │ │ xxxxxxxx │ ... │ xxxxxxxx │

└───┴─────────┘ └──────────┘ └──────────┘ └──────────┘ └──────────┘

8 7654321 87654321 87654321 87654321 87654321

<-------------------- nnnnnnn bytes -------------------->

The value of nnnnnnn must lie between 1 and 126: that is,

it cannot be all zeroes or all ones. This is not a significant

constraint: it allows lengths up to 2^1008 − 1 to be

specified.

- Indefinite form

- For constructed types only, the length may be set to 10000000. This is

followed by an arbitrary number of TLV constructs, the end of which is

signalled by two bytes of zeroes. The length is found by searching for

the two zeroes.

┌───┬─────────┐ ┌──────────┐ ┌──────────┐ ┌──────────┐ ┌──────────┐ ┌──────────┐

│ 1 │ 0000000 │ │ TLV item │ │ TLV item │ ... │ TLV item │ │ 00000000 │ │ 00000000 │

└───┴─────────┘ └──────────┘ └──────────┘ └──────────┘ └──────────┘ └──────────┘

8 7654321 87654321 87654321

Perhaps you need to be an old-time software developer to recognise just how exquisite the BER length encoding is.

It is remarkably compact for short lengths (a single byte),

yet is hugely extensible to numbers far in excess of anything that could possibly be required in everyday use.
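The three length forms can be sketched as follows. The function names are hypothetical, and the encoder always picks the shortest definite form, which is one of several choices that BER permits.

```python
def encode_length(length: int) -> bytes:
    """Encode a definite length, using the short form whenever it fits."""
    if length < 0:
        raise ValueError("length cannot be negative")
    if length < 128:
        return bytes([length])                      # short form: bit 8 is zero
    body = length.to_bytes((length.bit_length() + 7) // 8, "big")
    if len(body) > 126:
        raise ValueError("length needs more than 126 bytes")
    return bytes([0x80 | len(body)]) + body         # long form: count, then bytes


def decode_length(data: bytes) -> tuple:
    """Return (length, bytes used); the length is None for the indefinite form."""
    first = data[0]
    if first < 0x80:
        return first, 1                             # short form
    count = first & 0x7F
    if count == 0:
        return None, 1                              # indefinite form: 10000000
    if count == 127:
        raise ValueError("a count of all ones is not allowed")
    if len(data) < 1 + count:
        raise ValueError("truncated length")
    return int.from_bytes(data[1:1 + count], "big"), 1 + count
```

A length of 300 therefore encodes as 82 01 2C: the first byte says that two length bytes follow, and those two bytes hold 300 in binary.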

BER-encoding of value

The binary encoding of the value segment of a TLV construct depends on its implied type.

Most of the encodings are obvious or trivial, except for OBJECT IDENTIFIER.

Here are descriptions of some of them.

- BOOLEAN

- A boolean value is encoded as hexadecimal 00 for false, and anything

else for true.

- INTEGER

- An integer value is encoded as a binary number, right aligned in a bit

string that is a multiple of eight bits. Negative numbers are encoded in

twos complement form so that the high-order bit is 1.

- ENUMERATED

- In this architecture, an enumerated value can be positive or negative,

so it is encoded in the same way as an INTEGER.

- BIT STRING

- A generalised bit string can contain any number of bits, not

necessarily a multiple of eight bits. But TLV values must always contain

an integral number of bytes. To code this, an arbitrary-length bit string

is padded out on the right to a multiple of eight bits, and

then prefixed on the left with a single byte that contains the

number of padding bits that have been added. For example, a string of

three one bits, 111, can be encoded as 00000101,11100000, or hexadecimal

05E0x, where the 05x shows that five padding bits have been added. The

BER encoding does not specify what padding bits should be used.

- OBJECT IDENTIFIER

- The OBJECT IDENTIFIER type is an encoding

of the sequence of positive integers occurring on a specific arc within

the object-id tree. However, a more compact notation than the SEQUENCE

OF INTEGER TLV encoding is used. The binary representation of

each integer in the sequence is split into segments of seven bits each,

padded with zero bits on the left if necessary. Then a 1 bit is prefixed

to each seven-bit segment except the last, which is prefixed with a 0

bit. For example, 3166 is hexadecimal C5E, or binary 1100,0101,1110.

Padding on the left and splitting into seven-bit segments gives

0011000,1011110. Prefixing the non-last segment with 1 and the last

segment with 0 gives 10011000,01011110, which is 985Ex. This encoding

has the benefit that an integer less than 128 can be encoded in a single

byte with its usual representation.

As a further optimisation, the first two integers in each sequence are

merged into one by calculating the value 40×i1+i2,

where i1 and i2 are the

first two numbers in the sequence. This means that 40 is added to the

second integer in each iso sequence, and 80 is added to the

second integer in each joint-iso-itu-t sequence, and then the

initial integer is suppressed in all three branches. Why is the

multiplier 40? Probably because it is just less than one third of 127,

so the three ranges can all have their first and second integers encoded

into a single byte, so long as there are less than 40 branches at each

of the nodes. In fact, the 0 and 1 nodes can never have

more than 40 branches, or the encodings would overlap. (If the

denouement of The Hitchhiker's Guide to the Galaxy had

been more widely known when BER was being defined, the multiplier might

well have been 42.)

- NULL

- The NULL type is used to specify an

element whose presence is required, but whose value is not required. As

it is empty, the V part of TLV is absent, and the L part is zero. NULL

is therefore encoded as 0500x.

- UTCTime

- This is the first of the three time formats in ASN.1. This one encodes

a date and time incorporating a two digit year, even though it was

specified in 1982, when the imminent Y2K problem was already well

understood. It can be encoded as an ASCII character string in one of the

following formats:

yymmddhhmmZ

yymmddhhmmssZ

yymmddhhmm+hhmm

yymmddhhmm-hhmm

yymmddhhmmss+hhmm

yymmddhhmmss-hhmm

where yymmdd is a date in year, month, day order, and hhmmss

is a time in hour, minute, second format. The suffix Z indicates strict

UTC time (equivalent to the Greenwich Mean Time time zone), and the

suffix ±hhmm specifies a time offset in hours and

minutes to specify that the encoded time is in a different time zone. If

the offset is present, the encoded time is a local time, and the offset

is the signed amount by which the local time is ahead of UTC. So the UTC

time is obtained by subtracting the offset from the specified local

time. An offset with a minus sign is added to the local time specified,

and an offset with a plus sign is subtracted.

- GeneralizedTime

- This is the second of the three time formats. It is nominally based on

the ISO standard 8601, which specifies a four digit year, and allows the

addition of fractional seconds. But unlike ISO8601, no delimiters are

allowed in the date/time representation. A single decimal fraction

delimiter is allowed, which is either a comma or a period (full stop).

The decimal fraction normally appears after the ss seconds

component, but can appear after the mm minutes or hh hours

instead. The time zone offset is also allowed to specify only hh

hours, or to be omitted altogether, in which case the time is interpreted as

a local time only. The following are all examples of valid encodings

(from X.680):

19851106210627.3

19851106210627.3Z

19851106210627.3-0500

198511062106.456

1985110621.14159

- TIME

- This is a fairly recent time format that is specifically meant to

mirror ISO 8601. It has been subtyped into a wide variety of other

useful time and date types. However, it has not been incorporated into

any of the entities used by SSL, so it is not discussed further.

- Character string types

- There are a dozen different character string types. The only basic

difference between them is the range of characters (the alphabet, or

character set) that can be used to encode them. The common character

string types and their character sets are:

- NumericString

- A string composed only of the ASCII numeric digits, 0-9, plus

space. (11 characters.)

- PrintableString

- A string composed only of ASCII letters, numbers, and punctuation:

A-Z, a-z, 0-9, ' ( ) + - / : = ? . , plus space. Notice that @ and

currency symbols are not included. (74 characters.)

- VisibleString

- A string composed only of the ASCII printing characters, plus

space, and excluding the control characters. It is defined by the

ISO_IR 6 character set: A-Z, a-z, 0-9, ! " # $ % & ' ( ) * + , -

. / : ; < = > ? @ [ ] \ ^ _ ` { } | ~ plus space. (95

characters.)

- IA5String

- A string composed of any of the US-ASCII characters, including

DEL, space, and the control characters. (IA5 means International

Alphabet 5.) (128 characters.)

- UniversalString

- A string composed of 32-bit Unicode characters. (Potentially 4

billion characters, but many are not yet defined.)

- UTF8String

- A string encoded in the variable length character format UTF-8. It

includes the same characters as a UniversalString, but is usually encoded

more compactly. (Also potentially 4 billion characters.)

- There are several other named character string types, but they are

obsolete and not widely used.
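Two of the value encodings described above, OBJECT IDENTIFIER and BIT STRING, are easier to follow in code. The sketch below produces only the value (V) bytes, not the tag or length, and the function names are hypothetical.

```python
def base128(value: int) -> bytes:
    """Split a number into seven-bit groups, setting bit 8 on all but the last."""
    groups = [value & 0x7F]
    value >>= 7
    while value:
        groups.append(0x80 | (value & 0x7F))
        value >>= 7
    return bytes(reversed(groups))


def encode_oid_value(dotted: str) -> bytes:
    """Encode an object identifier such as '1.2.840.113549'."""
    first, second, *rest = (int(part) for part in dotted.split("."))
    if first not in (0, 1, 2) or (first < 2 and second >= 40):
        raise ValueError("invalid first or second integer")
    encoded = base128(40 * first + second)      # the first two are merged
    for number in rest:
        encoded += base128(number)
    return encoded


def encode_bit_string_value(bits: str) -> bytes:
    """Encode a string of '0' and '1' characters, padding with zero bits."""
    padding = (-len(bits)) % 8
    padded = bits + "0" * padding
    body = int(padded, 2).to_bytes(len(padded) // 8, "big") if padded else b""
    return bytes([padding]) + body              # first byte: count of pad bits
```

Here base128(3166) reproduces the 985Ex of the worked example, and encode_bit_string_value("111") gives 05E0x.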

DER – the Distinguished Encoding Rules

The Basic Encoding Rules described above allow a certain amount of

flexibility in the binary representation of the abstract specification. The

Distinguished Encoding Rules tighten up the ambiguities so that the

encoding can only be done in one way. This leads to the following changes:

- In the length section of the TLV encoding, the shortest possible

encoding must be used. For example, if the length is less than 128, the

short form must be used. For longer lengths, the long form is used, but

the minimum number of bytes must be used. The indefinite length form

must not be used.

- In the boolean type, FF must be used for the true

value. (BER allows any non-zero value.)

- In an INTEGER, the shortest possible encoding must be used.

The sign bit, either 0 or 1, must not be extended into superfluous high-order bytes.

So FFx encodes −1, and 00FFx encodes 255, but FFFFx and 0000FFx are both invalid.

In Larmouth's book, this rule is expressed by the statement that the top nine bits must not be the same.

(Larmouth is a bit ambiguous about whether this restriction is a DER rule or a BER one.

X.690 states it as part of the basic INTEGER encoding, so it applies to BER as well.)

- An ENUMERATED type is encoded in exactly the same way as an INTEGER, so the same rules apply:

the top nine bits must not be the same.

- In a BIT STRING, the padding bits must always be zeroes.

- In a GeneralizedTime, the decimal separator must be a full stop (period).

- In a SET or SET OF construction, BER allows the members to be in any

order, but DER requires the elements to be sorted. This contradicts the

normal mathematical definition of a set, which says specifically that

members are not ordered; but there is no other simple way to ensure that

two identical sets are indeed identical when encoded. This difficulty

hints that a SET is not really a very good primitive to use in ASN.1,

and indeed it is rarely used in practice, although the RelativeDistinguishedName in a certificate is defined as a SET OF.
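The "top nine bits" rule for an INTEGER can be checked mechanically. The following is a small sketch with hypothetical function names: one function produces the shortest two's complement encoding, and the other tests whether a given set of value bytes obeys the rule.

```python
def encode_integer_value(value: int) -> bytes:
    """Return the shortest big-endian two's complement encoding of value."""
    magnitude = value if value >= 0 else ~value
    length = magnitude.bit_length() // 8 + 1    # leaves room for the sign bit
    return value.to_bytes(length, "big", signed=True)


def is_minimal_integer(content: bytes) -> bool:
    """Check that the top nine bits are not all zeroes and not all ones."""
    if not content:
        return False                            # at least one byte is required
    if len(content) == 1:
        return True
    top_nine = (content[0] << 1) | (content[1] >> 7)
    return top_nine not in (0x000, 0x1FF)
```

This agrees with the examples in the list: FFx and 00FFx pass the check, while FFFFx and 0000FFx fail it.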

Containers used in SSL

SSL uses raw binary types such as encryption keys and signatures. It

should not come as a surprise, having ploughed through the huge

description of ASN.1 and its encodings above, that these binary types are

enclosed in ASN.1 containers, which are encoded using the Distinguished

Encoding Rules (DER).

The RSA encryption containers

As described earlier, the RSA algorithm specifies a public key and a

private key. The ASN.1 specification for these is contained in

RFC2437.

The public key is just a sequence of two integers.

RSAPublicKey ::= SEQUENCE {

modulus INTEGER, -- n

publicExponent INTEGER -- e

}
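To see how little is involved, here is a sketch that DER-encodes an RSAPublicKey by hand. The helper names are hypothetical, and the toy modulus 3233 and exponent 17 in the usage note are illustrative values only, far too small for real use.

```python
def der_length(length: int) -> bytes:
    """Shortest definite length: short form below 128, otherwise long form."""
    if length < 128:
        return bytes([length])
    body = length.to_bytes((length.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def der_tlv(tag: int, content: bytes) -> bytes:
    """Assemble a TLV from a single-byte tag and its value bytes."""
    return bytes([tag]) + der_length(len(content)) + content


def der_integer(value: int) -> bytes:
    """Encode a non-negative INTEGER (tag 02) in the shortest form."""
    if value < 0:
        raise ValueError("this sketch handles non-negative values only")
    content = value.to_bytes(value.bit_length() // 8 + 1, "big")
    return der_tlv(0x02, content)


def rsa_public_key(modulus: int, public_exponent: int) -> bytes:
    """Encode the SEQUENCE (tag 30) of the two integers n and e."""
    return der_tlv(0x30, der_integer(modulus) + der_integer(public_exponent))
```

For the toy key, rsa_public_key(3233, 17) produces 30 07 02 02 0C A1 02 01 11: a seven-byte SEQUENCE holding a two-byte INTEGER and a one-byte INTEGER.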

The private key contains much more structure than the public key.

RSAPrivateKey ::= SEQUENCE {

version Version,

modulus INTEGER, -- n

publicExponent INTEGER, -- e

privateExponent INTEGER, -- d

prime1 INTEGER, -- p

prime2 INTEGER, -- q

exponent1 INTEGER, -- d mod (p-1)

exponent2 INTEGER, -- d mod (q-1)

coefficient INTEGER -- (inverse of q) mod p

}

Version ::= INTEGER

Although in principle it only needs to contain the modulus and the

private exponent, in practice the private key structure contains

additional information to assist in the decryption calculation. These

requirements are contained in

Fast decipherment algorithm for RSA public-key cryptosystem, and

include p and q, the factors of n. Any

of these hints and intermediate results would compromise the key, but it

is safe to store them in the private key structure because the entire

structure has to be kept secret.

Certificates

A major construct that is used extensively in SSL is a certificate.

This is a container for a public key, which is granted to a named subject,

and digitally signed by a trusted named issuer. The issuer assigns a

unique serial number to each certificate it issues.

An X.509 certificate is described in RFC5280.

It consists of a TBS certificate, a signature algorithm, and a

signature. The meaning of TBS is not specifically defined, but the

context implies a meaning of "to be signed". Obviously, the signed

part of the certificate cannot include the signature itself, so the

separation is a natural one:

Certificate ::= SEQUENCE {

tbsCertificate TBSCertificate,

signatureAlgorithm AlgorithmIdentifier,

signatureValue BIT STRING }

TBSCertificate ::= SEQUENCE {

version [0] EXPLICIT Version DEFAULT v1,

serialNumber CertificateSerialNumber,

signature AlgorithmIdentifier,

issuer Name,

validity Validity,

subject Name,

subjectPublicKeyInfo SubjectPublicKeyInfo,

issuerUniqueID [1] IMPLICIT UniqueIdentifier OPTIONAL,

-- If present, version MUST be v2 or v3

subjectUniqueID [2] IMPLICIT UniqueIdentifier OPTIONAL,

-- If present, version MUST be v2 or v3

extensions [3] EXPLICIT Extensions OPTIONAL

-- If present, version MUST be v3

}

Version ::= INTEGER { v1(0), v2(1), v3(2) }

CertificateSerialNumber ::= INTEGER

Validity ::= SEQUENCE {

notBefore Time,

notAfter Time }

Time ::= CHOICE {

utcTime UTCTime,

generalTime GeneralizedTime }

UniqueIdentifier ::= BIT STRING

SubjectPublicKeyInfo ::= SEQUENCE {

algorithm AlgorithmIdentifier,

subjectPublicKey BIT STRING }

Extensions ::= SEQUENCE SIZE (1..MAX) OF Extension

Extension ::= SEQUENCE {

extnID OBJECT IDENTIFIER,

critical BOOLEAN DEFAULT FALSE,

extnValue OCTET STRING

-- contains the DER encoding of an ASN.1 value

-- corresponding to the extension type identified

-- by extnID

}

I will not describe every single field in the certificate, but will

highlight some of them.

The certificate contains two names, one of which is the issuer

and the other is the subject. Each of them is a

Distinguished Name (DN), which is a sequence of Relative Distinguished

Names (RDNs), each of which is a type/value pair:

Name ::= CHOICE { -- only one possibility for now --

rdnSequence RDNSequence }

RDNSequence ::= SEQUENCE OF RelativeDistinguishedName

RelativeDistinguishedName ::=

SET SIZE (1..MAX) OF AttributeTypeAndValue

AttributeTypeAndValue ::= SEQUENCE {

type AttributeType,

value AttributeValue }

AttributeType ::= OBJECT IDENTIFIER

AttributeValue ::= ANY -- DEFINED BY AttributeType

DirectoryString ::= CHOICE {

teletexString TeletexString (SIZE (1..MAX)),

printableString PrintableString (SIZE (1..MAX)),

universalString UniversalString (SIZE (1..MAX)),

utf8String UTF8String (SIZE (1..MAX)),

bmpString BMPString (SIZE (1..MAX)) }

The ASN.1 syntax does not specify the format of the value

in the RDN, except to hint that it should be a DirectoryString.

The supporting text in RFC5280 says that new certificates should only use

PrintableString or UTF8String encodings. In

real life, Distinguished Names are specified as a comma-separated list of

character RDNs, like CN=xxxx,O=xxxx,OU=xxxx, but within the certificate

the attribute types (CN, O, OU) are actually DER-encoded OBJECT IDENTIFIERs. The preferred

textual form of a distinguished name is defined in RFC1779.

As an aside, distinguished names and RDNs are widely used in the LDAP

protocol, as every object in an LDAP directory is identified by a DN.

The certificate definition includes two optional UniqueIdentifier

fields, which were supposed to disambiguate reused names. They were never

used, and are now deprecated.

Certificates conforming to this specification contain time values. The

ASN.1 syntax implies that there is a choice of format, but RFC5280 states

that the time must be encoded with a two digit year (UTCTime)

until the end of 2049, and with a four digit year (GeneralizedTime)

from 2050. This does seem like a long time to retain an encoding that was

obsolete even when it was invented. It is to be hoped that a later version

of this RFC will bring that conversion date a little closer.
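The date rule can be sketched as a function that picks the encoding from the year. The function name is hypothetical. The lower bound of 1950 reflects the RFC5280 convention that a two digit year of 50 or more means 19yy, and the sketch always emits the yymmddhhmmssZ form with seconds present.

```python
from datetime import datetime, timezone


def encode_validity_time(moment: datetime) -> bytes:
    """Encode a time as UTCTime (tag 17) or GeneralizedTime (tag 18)."""
    if moment.tzinfo is None:
        raise ValueError("a timezone-aware datetime is required")
    moment = moment.astimezone(timezone.utc)
    if 1950 <= moment.year <= 2049:
        tag, text = 0x17, moment.strftime("%y%m%d%H%M%SZ")   # two digit year
    else:
        tag, text = 0x18, moment.strftime("%Y%m%d%H%M%SZ")   # four digit year
    body = text.encode("ascii")
    return bytes([tag, len(body)]) + body                    # short-form length
```

The last second of 2049 therefore encodes as a 13-character UTCTime, 491231235959Z, and the first second of 2050 as a 15-character GeneralizedTime, 20500101000000Z.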

The signature part of the certificate contains an algorithm identifier,

which itself contains two parts:

AlgorithmIdentifier ::= SEQUENCE {

algorithm OBJECT IDENTIFIER,

parameters ANY DEFINED BY algorithm OPTIONAL }

The algorithm referred to is the signature algorithm. Recall that a digital

signature is a digest followed by an encryption, so the algorithm must

identify both parts.

There is a list of a few signature OIDs in RFC3279,

but it is now effectively superseded. The OIDs were originally specified as

references to section numbers in public-key cryptography standards (PKCS)

published by RSA Data Security Inc, a software security company founded by

the inventors of the RSA algorithms. It is now RSA Security LLC, a division

of the EMC corporation. This means that the PKCS documents are now held on

the EMC website, and the PKCS#1 documentation is at

PKCS

#1: RSA Cryptography Standard. From there we can obtain the following

signature OIDs, which are in the deprecated 1.2.840 arc:

pkcs-1 OBJECT IDENTIFIER ::= {iso(1) member-body(2) us(840) rsadsi(113549) pkcs(1) 1}

md2WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 2 }

md5WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 4 }

sha1WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 5 }

sha224WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 14 }

sha256WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 11 }

sha384WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 12 }

sha512WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 13 }

sha512-224WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 15 }

sha512-256WithRSAEncryption OBJECT IDENTIFIER ::= { pkcs-1 16 }

When the OIDs for these algorithms are used in the AlgorithmIdentifier

sequence, the parameters element must be present, and it must

be equal to NULL. Note that the MD2 and MD5

digest algorithms are obsolete and no longer used. SHA-1 is still widely

used, even though it has been deprecated. SHA-256 is slowly coming into use.
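Putting the OID encoding and the NULL parameter together gives the complete AlgorithmIdentifier. The sketch below uses hypothetical helper names and assumes short-form lengths, which is enough for these OIDs.

```python
def base128(value: int) -> bytes:
    """Seven bits per byte, with bit 8 set on every byte except the last."""
    groups = [value & 0x7F]
    value >>= 7
    while value:
        groups.append(0x80 | (value & 0x7F))
        value >>= 7
    return bytes(reversed(groups))


def tlv(tag: int, content: bytes) -> bytes:
    """Assemble a TLV with a short-form length."""
    if len(content) >= 128:
        raise ValueError("this sketch handles short-form lengths only")
    return bytes([tag, len(content)]) + content


def oid_tlv(dotted: str) -> bytes:
    """Encode an OBJECT IDENTIFIER (tag 06) from its dotted form."""
    first, second, *rest = (int(part) for part in dotted.split("."))
    content = base128(40 * first + second)
    for number in rest:
        content += base128(number)
    return tlv(0x06, content)


def algorithm_identifier(dotted: str) -> bytes:
    """SEQUENCE (tag 30) of the algorithm OID and a NULL parameter (05 00)."""
    return tlv(0x30, oid_tlv(dotted) + b"\x05\x00")


SHA256_WITH_RSA = "1.2.840.113549.1.1.11"   # { pkcs-1 11 } from the list above
```

The result of algorithm_identifier(SHA256_WITH_RSA) is the fifteen bytes 30 0D 06 09 2A 86 48 86 F7 0D 01 01 0B 05 00, a pattern that turns up in a great many certificates.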

Trusting certificates

A certificate is constructed in such a way that it can be trusted.

The trust arises because of the digital signature that is included within the certificate.

Recall that a digital signature is created by using asymmetric encryption, with a private key,

of the digest of a piece of data – in this case, the rest of the certificate.

The signature is validated by decrypting it with the corresponding public key.

The digitally signed piece of data can be trusted

as far as the owner of the private key can be trusted.

To produce this level of trust, a number of public organisations called

certificate authorities have arisen.

A certificate authority has a single purpose: to create trusted digital signatures within certificates.

The certificate authority is identified by the issuer distinguished name in the certificate,

as described above.

The other distinguished name in the certificate, the subject, is the user of the certificate.

The trust relationship between the issuer and the subject is that

the issuer asserts that the subject is who they say they are,

and confirms the association between the subject and the distinguished name that represents it.

To cement this relationship, a fee is usually passed from the subject to the issuer.

The actual mechanics of the process are that the subject creates a file called a certificate request.

This contains all the fields that the subject wants the certificate to contain,

but lacking the issuer's name and signature. The request is then sent to the issuer for signing.

When the issuer (the certificate authority) has confirmed the identity and validity of the subject's

distinguished name (and accepted the appropriate fee, if necessary),

it inserts the issuer's name and signature into the subject certificate to produce a signed certificate.

This binds the subject's distinguished name inexorably to the issuer's distinguished name,

along with the serial number and the range of dates for the validity.

If the subject attempts to change any of these attributes, by as much as a single bit,

the signature will fail to validate, and the certificate becomes useless.
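The effect of changing a single bit can be demonstrated with a deliberately simplified signature scheme. This is a toy, not how a real certificate authority signs: it uses "textbook" RSA with no padding, a key built from two small well-known Mersenne primes, and a made-up byte string standing in for the certificate. All the names are hypothetical, and the three-argument pow with a negative exponent needs Python 3.8 or later.

```python
import hashlib

# Toy key: two Mersenne primes, far too small to be secure.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                               # modulus
E = 65537                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))       # private exponent


def digest_as_int(data: bytes) -> int:
    """SHA-256 digest of the data, reduced to fit below the toy modulus."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign(data: bytes) -> int:
    """Encrypt the digest with the private key."""
    return pow(digest_as_int(data), D, N)


def verify(data: bytes, signature: int) -> bool:
    """Decrypt the signature with the public key and compare digests."""
    return pow(signature, E, N) == digest_as_int(data)


certificate_body = b"subject=example, serial=1234"    # stand-in, not real DER
signature = sign(certificate_body)
tampered = bytes([certificate_body[0] ^ 0x01]) + certificate_body[1:]
```

Here verify(certificate_body, signature) succeeds, while verify(tampered, signature) fails, even though only one bit of the data has been flipped.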

Whenever the subject's signed certificate is presented to another user, such as a client using a web browser,

the client must validate the signature in the subject certificate. To do this, the client must know the

public key of the certificate authority, corresponding to the private key used to produce the signature.

This public key is itself contained within another certificate, called the certificate authority certificate,

or more simply just the signing certificate.

How can the certificate authority certificate be trusted? In some cases,

the trust in this certificate is obtained by having it signed by yet another certificate authority.

In fact, sometimes a whole chain of signing certificates is built, with each certificate in the chain depending

on the signature of a higher authority.

However, this trust chain cannot go on for ever, and eventually a top certificate is reached,

which is called a self-signed certificate, in which the subject and issuer distinguished names are identical.

The certificate is still signed, but the signature really says nothing about the trustworthiness of the certificate,

because it is produced by the subject of the certificate, rather than an independent authority.
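The walk up the chain can be sketched with nothing more than the two distinguished names. This is only an outline: the Cert class and build_chain function are hypothetical, real validation must also check every signature, validity date, and extension along the way, and the names in the usage note are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    subject: str    # distinguished name of the certificate's user
    issuer: str     # distinguished name of the signer


def build_chain(leaf: Cert, known: list, max_depth: int = 10) -> list:
    """Follow issuer names from the leaf until a self-signed certificate."""
    by_subject = {cert.subject: cert for cert in known}
    chain = [leaf]
    while chain[-1].subject != chain[-1].issuer:    # stop at self-signed
        if len(chain) >= max_depth:
            raise ValueError("chain is too long, or contains a loop")
        parent = by_subject.get(chain[-1].issuer)
        if parent is None:
            raise LookupError("issuer certificate not found")
        chain.append(parent)
    return chain
```

Given a server certificate issued by "CN=Intermediate", which is in turn issued by a self-signed "CN=Root", build_chain returns the three certificates in order from the server up to the root.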

How can a self-signed certificate be trusted?

The answer to this question depends entirely on the context in which the trust is required.

In the most familiar case, when SSL is being used in a web browser, the browser itself provides the context.

Each browser is shipped with a list of trusted certificate authorities, which you get for free when you install

the browser software.

What this means in practice is that

the browser manufacturer is trusting a certain set of certificate authorities

on your behalf.

This may be regarded as a good thing or a bad thing: it means that you do not need to get into the minutiae

of the trustworthiness of certain certificate authorities, but it also means that you have lost the ultimate

control over the choice of those certificates.

In the early days of SSL deployment, there were only a few certificate authorities.

At the latest count there are over 300.

Within a private network, it is quite feasible to become a private certificate authority,

and to sign all your own certificates. To express your trust in your own certificate authority,

you must install the self-signed certificate of your private certificate authority into the trusted certificate store

of all the browsers that may use it.

This presumes that all the client browsers can be reconfigured under your own control.

Revoking certificates

Certificates become expired when their notAfter date is reached.

Sometimes a certificate may need to be invalidated before the expiry date is reached, however.

The issuer cannot go to the subject's site and physically remove the certificate, so a less reliable process has to be used.

There are two such processes.

- Periodically, each certificate authority publishes a certificate revocation list (CRL),

which contains the serial numbers of all the certificates that have been revoked, but not yet expired.

It is a requirement that a process that validates a certificate should look in the most current

revocation list to find out whether the certificate has been revoked.

It is not expected that the validator should obtain the list from the certificate authority's site for every request.

Instead, the CRL is cached locally, and only the local copy is referenced.

Delays in publishing the CRL, and delays in downloading the cached copy, can mean that the validator may be

unaware of revoked certificates for up to a week.

- A more dynamic process called the Online Certificate Status Protocol

(OCSP) was invented to circumvent the delays implicit in CRLs.

Using this protocol, the validator does indeed contact an external site called an OCSP responder,

supplying the serial number of the certificate to be validated.

The responder can be the certificate authority itself, or a suitably delegated response server.

The responder replies with a signed response that indicates the current revocation state of the specified certificate.

The main drawback with OCSP is that the client has to contact yet another server during the validation process,

leading to performance and privacy concerns.

An enhancement to the original OCSP protocol is the stapled OCSP request.

In this protocol it is the server, rather than the client, who contacts the OCSP responder.

The server then attaches (or staples) the OCSP response to the certificate to confirm that it is not revoked.

The stapled response is timestamped and signed by the OCSP responder,

so that the client can confirm that the OCSP response is trusted and is reasonably current.

However, none of these revocation systems is totally satisfactory, as discussed in

Solving The SSL Certificate-Revocation Checking Shortfall. It is even claimed that

certificate revocation doesn't work in practice,

because many browsers do not even perform the revocation check (at least, in 2013).

Creating the artefacts

Before you can embark on providing an SSL service, you have to create the

artefacts needed to control the service. These are just:

- A private key.

- The corresponding public key, wrapped up in a certificate.

If you want to have your certificate signed by an external certificate

authority, you will also need:

- A certificate request file.

If you want to sign your own certificates, you will need to create:

- A private certificate authority certificate.

If you want to communicate with a server that requires you to provide client

authentication, you will need:

- A client certificate

Although there are proprietary platform-specific tools to do all of these,

the most widely used set of tools available is OpenSSL,

an open-source package that is available on UNIX and Windows platforms. It

was first created by the Australian Eric Andrew Young in 1995, and was

originally known as SSLeay. Young and his co-developer Tim Hudson stopped

work on SSLeay when they moved to RSA Security at the end of 1998, and the

open-source community began developing OpenSSL at the same time. A new fork

of OpenSSL, called LibreSSL,

was begun in 2014 to rectify some problems.

Yet another encoding method: PEM – Privacy Enhanced Mail

Although the native encoding method for the SSL artefacts is DER

(Distinguished Encoding Rules), the OpenSSL tool prefers to produce its

output in a format known as PEM (Privacy Enhanced Mail). As its name

implies, Privacy Enhanced Mail was originally designed as an

infrastructure for encrypting email, but it never took off as a standard,

and has been superseded by Pretty Good Privacy (PGP).

All that now remains of the PEM infrastructure is the file format also

known as PEM.

DER-encoded objects contain binary data which is generally not

displayable or printable. PEM encoding uses base-64

notation to convert the binary DER objects into textual form, which

can be displayed or printed, or quoted directly in emails.

If you are familiar with hexadecimal encoding, you will know that it

encodes every four bits in a binary string into one of the characters from

the 16-character alphabet 0123456789ABCDEF. Base-64 encoding

is similar, but instead it encodes every six bits into one of the

characters from the 64-character alphabet

ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/.

For instance, 000000 encodes as A, 011010

encodes as a, and 111111 encodes as /.

Unlike hexadecimal encoding, the case of the letters in base-64 encoding

is significant. If the string to be encoded is not a multiple of 24 bits

then it is padded out with zero bits, and any complete six-bit padding

units are encoded as equals signs (=). Therefore, the length of a base-64

encoded message is always a multiple of four characters. There is an online

hexadecimal to base-64 converter at tomeko.net.
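
As a rough illustration of the six-bit grouping and padding rules described
above, here is a minimal Python sketch. The function name base64_by_hand is
my own invention for this example; in practice you would simply call the
standard library's base64.b64encode, which is used here only as a cross-check.

```python
import base64

# The 64-character alphabet quoted in the text.
ALPHABET = (
    "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    "abcdefghijklmnopqrstuvwxyz"
    "0123456789+/"
)


def base64_by_hand(data: bytes) -> str:
    """Encode bytes six bits at a time (illustrative helper, not a library API)."""
    bits = "".join(f"{byte:08b}" for byte in data)
    # Pad with zero bits up to the next multiple of 24 bits.
    padded_length = -(-len(bits) // 24) * 24
    padded = bits.ljust(padded_length, "0")
    chars = []
    for i in range(0, padded_length, 6):
        if i >= len(bits):
            # This six-bit unit consists entirely of padding bits.
            chars.append("=")
        else:
            chars.append(ALPHABET[int(padded[i:i + 6], 2)])
    return "".join(chars)


print(ALPHABET[0b000000], ALPHABET[0b011010], ALPHABET[0b111111])  # A a /
print(base64_by_hand(b"M"))   # TQ==
print(base64_by_hand(b"Ma"))  # TWE=

# Cross-check against the standard library for a few inputs.
for sample in (b"", b"M", b"Ma", b"Man", b"\x00\xff\x10 any bytes"):
    assert base64_by_hand(sample) == base64.b64encode(sample).decode("ascii")
```

Note that the output length is always a multiple of four characters, because
every 24-bit block produces exactly four output characters.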

To produce a PEM-encoded file, the base-64 data is split into 64-byte

lines, and delimited with lines containing ASCII strings, called

encapsulation boundaries. In the formal PEM specification, these delimiters are

-----BEGIN PRIVACY-ENHANCED MESSAGE----- and -----END PRIVACY-ENHANCED MESSAGE-----,

but in the format produced by OpenSSL the delimiters used are

-----BEGIN type----- and -----END type-----, where type can be one of:

- CERTIFICATE

- CERTIFICATE REQUEST

- ENCRYPTED PRIVATE KEY

- RSA PRIVATE KEY

- X509 CRL

Each of the encapsulation boundaries must start and end with exactly five

hyphens.
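
The wrapping described above can be sketched in a few lines of Python. This
is only an illustration under stated assumptions: the helper names der_to_pem
and pem_to_der are hypothetical, and the input bytes are a placeholder, not a
real certificate.

```python
import base64


def der_to_pem(der: bytes, type_name: str) -> str:
    """Wrap DER bytes in OpenSSL-style encapsulation boundaries."""
    body = base64.b64encode(der).decode("ascii")
    # Split the base-64 text into lines of at most 64 characters.
    lines = [body[i:i + 64] for i in range(0, len(body), 64)]
    return "\n".join(
        [f"-----BEGIN {type_name}-----", *lines, f"-----END {type_name}-----"]
    ) + "\n"


def pem_to_der(pem: str, type_name: str) -> bytes:
    """Strip the boundaries and decode the base-64 body back to DER bytes."""
    lines = pem.strip().splitlines()
    if len(lines) < 2 or lines[0] != f"-----BEGIN {type_name}-----":
        raise ValueError("missing or malformed BEGIN boundary")
    if lines[-1] != f"-----END {type_name}-----":
        raise ValueError("missing or malformed END boundary")
    return base64.b64decode("".join(lines[1:-1]))


# Placeholder bytes standing in for a DER object; NOT a real certificate.
fake_der = bytes(range(100))
pem = der_to_pem(fake_der, "CERTIFICATE")
print(pem)

# Converting back recovers the original bytes exactly.
assert pem_to_der(pem, "CERTIFICATE") == fake_der
```

Python's standard ssl module offers DER_cert_to_PEM_cert and
PEM_cert_to_DER_cert for the certificate case, so the hand-rolled version
above is useful mainly for seeing what the format consists of.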

As most ASN.1 objects are a SEQUENCE with a long form length, they nearly

always begin with hexadecimal 3082, for which the base-64 encoding begins

with MII.
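
You can check the MII claim for yourself with a short Python sketch. The
function name base64_prefix and the example lengths are assumptions chosen
for illustration; the only facts relied on are the SEQUENCE tag (hexadecimal
30) and the long form length marker (hexadecimal 82, meaning that the length
follows in the next two bytes).

```python
import base64


def base64_prefix(length: int) -> str:
    """Return the first three base-64 characters of a DER SEQUENCE header.

    The header is the tag 0x30, the marker 0x82, and a two-byte length.
    """
    header = bytes([0x30, 0x82]) + length.to_bytes(2, "big")
    return base64.b64encode(header).decode("ascii")[:3]


print(base64_prefix(1024))   # MII
print(base64_prefix(16383))  # MII
print(base64_prefix(16384))  # MIJ
```

The third character depends on the top two bits of the length, which is why
the text says "nearly always": an object of 16384 bytes or more starts with
MIJ or a later letter instead.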

The openssl toolset

The openssl tool is a set of command line functions for managing keys and

certificates. The primary openssl command contains many subcommands to create SSL artefacts such as keys and certificates.

There are sample commands at: madboa.com.

Some examples of the more useful subcommands are the following:

- Create a self-signed CA certificate valid for 3653 days (10 years)

and save the corresponding private key.

req -verbose -x509 -nodes -days 3653 -newkey rsa:2048 -keyout PrivateCA.key -out PrivateCA.pem

- Convert the PEM-encoded CA certificate to DER-encoded.

This .crt file type was originally expected by Netscape to be DER-encoded,

but popular usage now also accepts such files being PEM-encoded.

(See DER vs. CRT vs. CER vs. PEM certificates and how to convert them.)

In fact, it seems that Android actually requires PEM-encoded CA certificates.

x509 -inform PEM -in PrivateCA.pem -outform DER -out PrivateCA.crt

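- Create a certificate request for a server certificate. (The -days

option is ignored by req unless -x509 is also given, so the validity

period is actually decided when the request is signed.)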

req -verbose -new -nodes -days 365 -newkey rsa:2048 -keyout ServerCert.key -out ServerReq.pem

This command will prompt you for the elements of a distinguished name

of your server. You must specify the hostname for your server in the

Common Name section of the distinguished name.

- Sign the request file with the private key for the CA that you

saved earlier. This creates a server certificate signed by your

private CA. You will be prompted to confirm (with a y) that you wish

to sign and then commit the certificate. On completion, the new

certificate will be saved in /etc/ssl/newcerts, as well as the file

specified by -out.

ca -verbose -in ServerReq.pem -out ServerCert.pem -cert PrivateCA.pem -keyfile PrivateCA.key

- Export the signed server certificate as a PKCS12 password-protected

file. You will be prompted for an Export Password, which will be used

to encrypt the file while it is being transported to another system.

pkcs12 -export -in ServerCert.pem -inkey ServerCert.key -out ServerCert.p12

- To terminate OpenSSL subcommand mode, type the exit command.

openssl dhparam is used to generate a Diffie-Hellman prime

and generator pair (p and g).

Finally, the SSL protocol itself

Now that I have explained encryption, digital signatures, ASN.1

specifications, DER encoding, private and public keys, certificates, and

openssl tooling, it's finally possible to describe the SSL protocol itself

(more properly known as TLS).

The SSL protocol was originally invented by the Netscape Corporation as a

way of providing secure browsing in their web browser. The Netscape

Corporation was absorbed into AOL, which no longer preserves Netscape's

original SSL documentation, but it has been captured by the

Wayback Machine.

As I explained earlier, symmetric-key encryption is considerably more

efficient than public key encryption. So, for encrypting bulk data

transfer with high performance, symmetric-key encryption is a must. But to

use it, both partners in the conversation must know a single shared secret

key. But how do you share a secret key with a partner that you have never

communicated with before? This is known as the key exchange problem. An

early solution to this problem was for a trusted courier to carry the key,

physically locked in a secure container, from one location to another.

This is highly secure, but expensive and inconvenient, and hardly

practical for electronic commerce. Furthermore, the same key is used for

encrypting all traffic, which makes it somewhat easier for an attacker

to break the key.

SSL, in brief, is a solution to the key exchange problem that is suitable

for electronic communication.

The two partners in the conversation must be identified as the client and

the server as their roles are different: the conversation is not

symmetric. The conversation is initiated by the client, who provides a

list of suggested encryption techniques. The server responds with a

certificate containing the server's public key, and an encryption

technique that is acceptable to the client. The client validates the

server's certificate, and uses the public key within it to encrypt a

random string called the pre-master-secret, which it sends to the server.

The server uses its private key to decrypt the pre-master-secret. At this

point, the key exchange problem is solved: the client and server can both

use the pre-master-secret to generate the key required by the mutually

chosen encryption technique.

Both the client and server now possess a mutually chosen encryption

algorithm and a key to use with it. They are now in a position to exchange

secret encrypted messages using fast symmetric-key encryption, using a

shared key that has never appeared in plain text in the conversation.

Furthermore, a different key is used for each conversation, limiting the

opportunity for an attacker to break it.
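
The exchange summarised above can be sketched with a toy example in Python.
Everything here is a deliberate simplification: the RSA key pair uses
textbook-sized numbers that offer no security at all, the certificate and its
validation are left out, and a single SHA-256 hash stands in for the real key
derivation function, which is more elaborate. The names handshake and
derive_key are hypothetical.

```python
import hashlib
import secrets

# Toy RSA key pair for the server: n = 61 * 53, public exponent e,
# private exponent d. Hopelessly insecure; for illustration only.
N, E, D = 3233, 17, 2753


def derive_key(pre_master_secret: int) -> bytes:
    """Turn the pre-master-secret into a 32-byte symmetric key.

    Assumption: SHA-256 is a stand-in for the protocol's real derivation.
    """
    return hashlib.sha256(pre_master_secret.to_bytes(2, "big")).digest()


def handshake() -> tuple[bytes, bytes]:
    # Client: pick a random pre-master-secret in the range 2..N-1 and
    # encrypt it with the server's public key (E, N).
    pre_master_secret = 2 + secrets.randbelow(N - 2)
    encrypted = pow(pre_master_secret, E, N)

    # Only the encrypted value crosses the network.

    # Server: recover the pre-master-secret with its private key (D, N).
    recovered = pow(encrypted, D, N)

    # Both sides derive the same symmetric key independently.
    return derive_key(pre_master_secret), derive_key(recovered)


client_key, server_key = handshake()
assert client_key == server_key
print("shared key:", client_key.hex())
```

Because the pre-master-secret is chosen afresh for each run, the derived key
differs from one conversation to the next, which is the property the text
describes.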

The summary above is only a brief overview. The actual conversation is,

of course, more detailed.

Specification of the encryption algorithm

As the primary purpose of SSL is to negotiate and then use a

symmetric-key algorithm, you would expect the specification of such an

algorithm to be at the centre of the protocol, and this is indeed the

case.

The encryption algorithm is bundled together with a key length (which may

be implicit), a key exchange algorithm, and a message authentication code

(MAC) algorithm, to produce an entity called a cipher suite, which is

identified by a 16-bit number.

The cipher suite numbers are allocated somewhat arbitrarily, and are

assigned symbolic names. Only the names give any clue to the components.
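
To show how the components can be read off a name, here is a minimal Python
sketch. The helper names split_cipher_suite_name and wire_bytes are
hypothetical, and the parsing assumes the TLS_<key exchange>_WITH_<cipher>_<MAC>
pattern followed by the names quoted in this document; names that do not
contain _WITH_ are rejected rather than guessed at.

```python
def split_cipher_suite_name(name: str) -> dict:
    """Split a cipher suite name into key exchange, cipher, and MAC parts."""
    if not name.startswith("TLS_") or "_WITH_" not in name:
        raise ValueError(f"unrecognised cipher suite name: {name}")
    key_exchange, _, cipher_and_mac = name[len("TLS_"):].partition("_WITH_")
    # The MAC is the last underscore-separated component.
    cipher, _, mac = cipher_and_mac.rpartition("_")
    return {"key_exchange": key_exchange, "cipher": cipher, "mac": mac}


def wire_bytes(number: int) -> bytes:
    """Return the two bytes that carry a 16-bit cipher suite number."""
    return number.to_bytes(2, "big")


print(split_cipher_suite_name("TLS_RSA_WITH_RC4_128_SHA"))
# {'key_exchange': 'RSA', 'cipher': 'RC4_128', 'mac': 'SHA'}

print(split_cipher_suite_name("TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256"))
# {'key_exchange': 'ECDHE_RSA', 'cipher': 'AES_128_CBC', 'mac': 'SHA256'}

print(wire_bytes(0xC027).hex())  # c027
```

The number itself carries no such structure, so a real implementation looks
the suite up in a table keyed by the 16-bit value.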

Examples of some of the standardised cipher suite names are

shown below. The full list is in the

IANA TLS Cipher Suite Registry.

| Hex value | Standardised cipher suite name |
| 0000x | TLS_NULL_WITH_NULL_NULL |
| 0001x | TLS_RSA_WITH_NULL_MD5 |
| 0002x | TLS_RSA_WITH_NULL_SHA |
| 0003x | TLS_RSA_EXPORT_WITH_RC4_40_MD5 |
| 0004x | TLS_RSA_WITH_RC4_128_MD5 |
| 0005x | TLS_RSA_WITH_RC4_128_SHA |
| 0006x | TLS_RSA_EXPORT_WITH_RC2_CBC_40_MD5 |
| 0007x | TLS_RSA_WITH_IDEA_CBC_SHA |
| 0008x | TLS_RSA_EXPORT_WITH_DES40_CBC_SHA |
| 0009x | TLS_RSA_WITH_DES_CBC_SHA |
| 000Ax | TLS_RSA_WITH_3DES_EDE_CBC_SHA |
| 000Bx | TLS_DH_DSS_EXPORT_WITH_DES40_CBC_SHA |
| 000Cx | TLS_DH_DSS_WITH_DES_CBC_SHA |
| 000Dx | TLS_DH_DSS_WITH_3DES_EDE_CBC_SHA |
| 000Ex | TLS_DH_RSA_EXPORT_WITH_DES40_CBC_SHA |
| 000Fx | TLS_DH_RSA_WITH_DES_CBC_SHA |
| 0010x | TLS_DH_RSA_WITH_3DES_EDE_CBC_SHA |
| C027x | TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256 |

| C028x |